And similarly, I think one of the things that's really also exciting about AI is you can take the best experiences you could provide to your customer, whether it's a great sales experience or a great marketing conversation, and you can scale it.

I think that just imagining retraining your workforce on a methodology is painful. It's a slow and expensive process. Not only is it hard to do, you don't want to do it frequently.

Now with AI, if you discover a technique that works, either intuitively, or through some measured automation, you can actually deploy it instantly.

It enables not only a degree of empathy and personalization which was not possible before, but as a leader of a company and a brand ambassador, you can try things more quickly. Then when you find a magical moment, you can operationalize it.

—Bret Taylor, Chairman of the Board, OpenAI, founder of Sierra

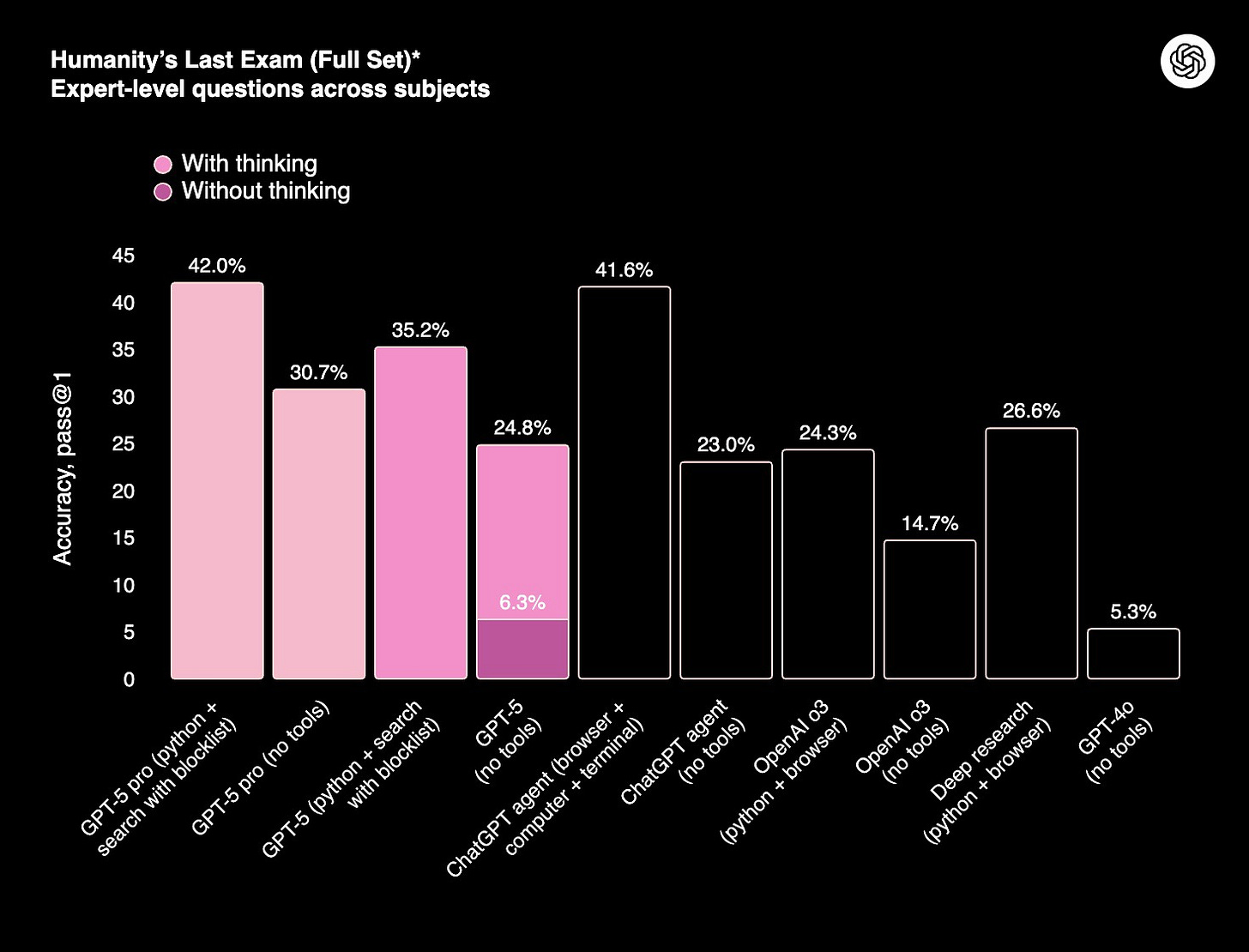

GPT-5 launched this past Thursday. Despite scoring 94.6 percent on AIME 2025 and 74.9 percent on SWE-bench, the general vibe seems to be that the launch was a flop.

Mostly this sentiment seems to be coming from the fact that Grok 4 was a huge step function change from Grok 3.

By comparison, GPT-5 seems more iterative than groundbreaking.

And while that's true, it misses the point.

Most LLMs are absolutely blowing traditional benchmarks out of the water.

From earning gold-medal scores at the International Mathematical Olympiad by solving five out of six challenging problems under the same conditions as the top human contestants, to passing the bar exam, we’re reaching the end of the current benchmarks we have for measuring human intelligence.

Once a frontier has been climbed, its usefulness as a differentiator collapses. The upper bounds are reached, the gap between players closes, and everyone moves on. That is exactly what is happening right now among the recent model releases.

Large language models have mastered knowledge retrieval and reassembly. They organized and recombined existing information in ways that were fast, useful, and increasingly indistinguishable from one model to the next.

Whether you were using OpenAI, Anthropic, Gemini, or a top Chinese lab, the ability to pull the right fact and package it into coherent text has been converging toward parity.

In this world, being “better at retrieval” stopped meaning much. Everyone could retrieve. Everyone could write.

The new axis of competition will not be about what a model knows. It will be about what a model can do. GPT-5 represents a pivot point because it can act.

For example, it can decide which model to use for a given task.

Nerds like me would play around with each model to figure out which was best at what, then switch between 4o, 4.5, o3, and o3-mini-high accordingly.

For the normies, this was a frustrating and confusing product experience. Now the model parses your prompt and calls the correct model based on the complexity of your ask.
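To make the routing idea concrete, here is a minimal sketch of what "pick a model based on the complexity of the ask" could look like. This is not how GPT-5 routes internally, which the post does not describe. The tier names, the hint words, and the word-count threshold are all assumptions I made up for illustration.

```python
from dataclasses import dataclass

# Hypothetical tier names: placeholders, not real model identifiers.
FAST_TIER = "fast-model"
REASONING_TIER = "reasoning-model"

# Assumed signal words that hint at a multi-step reasoning task.
REASONING_HINTS = ("prove", "debug", "step by step", "root cause", "plan")


@dataclass
class Route:
    model: str   # which tier was chosen
    reason: str  # why, so the decision can be inspected later


def route_prompt(prompt: str, long_prompt_words: int = 150) -> Route:
    """Pick a tier from crude complexity signals in the prompt."""
    text = prompt.lower()
    hits = [hint for hint in REASONING_HINTS if hint in text]
    if hits:
        return Route(REASONING_TIER, f"matched hint: {hits[0]}")
    if len(text.split()) > long_prompt_words:
        return Route(REASONING_TIER, "long prompt")
    return Route(FAST_TIER, "short, simple ask")


if __name__ == "__main__":
    print(route_prompt("What is the capital of France?"))
    print(route_prompt("Debug this stack trace and find the root cause."))
```

A real router would presumably use a learned classifier rather than keyword matching, but the shape is the same: one entry point, and the model choice becomes an internal decision instead of a dropdown.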

Tool calling is the bridge from static advisor to active agent. It patches two of the fundamental weaknesses that have limited pure language models since the beginning.

Workflow orchestration.

Language models are great at giving you a perfect one-off answer, but they fall apart in multi-step processes that require persistent state, error handling, and complex branching.

Tools let them track progress across dozens of steps. They can pause and wait for an API to respond, pick up where they left off, and recover from errors without human intervention.
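Here is a rough sketch of what "persistent state, error handling, and pick up where they left off" can mean in code. It assumes each step is a plain Python function that takes a dict and returns a new dict, and that progress is checkpointed to a JSON file. The function name `run_workflow` and the state layout are hypothetical, not taken from any particular framework.

```python
import json
from pathlib import Path
from typing import Callable

# A step takes the accumulated data and returns the updated data.
Step = Callable[[dict], dict]


def run_workflow(
    steps: list[tuple[str, Step]],
    state_path: Path,
    max_retries: int = 2,
) -> dict:
    """Run named steps in order, checkpointing after each one."""
    # Resume from a previous run if a checkpoint file exists.
    if state_path.exists():
        state = json.loads(state_path.read_text())
    else:
        state = {"done": [], "data": {}}

    for name, step in steps:
        if name in state["done"]:
            continue  # finished in an earlier run, skip it
        for attempt in range(max_retries + 1):
            try:
                state["data"] = step(state["data"])
                break
            except Exception:
                if attempt == max_retries:
                    # Out of retries: save progress so a later run can resume.
                    state_path.write_text(json.dumps(state))
                    raise
        state["done"].append(name)
        state_path.write_text(json.dumps(state))

    return state["data"]
```

Calling `run_workflow` again with the same `state_path` skips every step already listed under `done`, which is the "pick up where they left off" behavior in miniature.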

System integration.

LLMs live in a text box, waiting for your prompt. Tools let them break out. A tool can be an API call, a database query, or a function inside enterprise software. With tool calling, plain English becomes executable code.
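Under the hood, tool calling usually comes down to a registry of functions plus a dispatcher. The sketch below assumes the model emits a JSON object with a `name` and an `arguments` field. That shape is a simplification for illustration, not any vendor's exact wire format, and `lookup_order` with its fake data is a made-up stand-in for a real database query.

```python
import json
from typing import Any, Callable

# Registry mapping tool names to the Python functions that implement them.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Decorator that registers a function under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def lookup_order(order_id: str) -> dict:
    # Stand-in for a database query; the data is invented for this example.
    fake_db = {"A100": {"status": "shipped"}}
    return fake_db.get(order_id, {"status": "unknown"})


def dispatch(tool_call_json: str) -> Any:
    """Execute a tool call that the model emitted as a JSON string."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


if __name__ == "__main__":
    print(dispatch('{"name": "lookup_order", "arguments": {"order_id": "A100"}}'))
```

The model never touches the database itself. It only names a tool and supplies arguments, and your code decides what actually runs.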

In the past month I have built countless different tools for GPT-based systems. Email processors. Research assistants. Python scripters. Graphic designers. Podcast hosts.

Each one extends the reach of the model into a new domain of real work. When I tell the system, “read this trouble ticket and root cause the problem in five sentences,” the tools execute a sequence of operations that would otherwise require a human to jump between multiple systems, copy data, check lists, and verify entries. One sentence replaces an entire workflow.

What will make this transformative is the self-verification loop. When set up correctly, the model does not just perform a “task-to-be-done”. It checks its own work, confirms that each step completed successfully, and flags any anomalies to the human in the loop. Reliability at scale depends on this feedback loop. Without it, complexity collapses into chaos.
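A self-verification loop can be sketched in a few lines. The version below assumes each step is a triple of a name, an action, and a check function that inspects the action's result. Anything that fails its check or raises an error is flagged for a human instead of being silently accepted. The names `Report` and `run_with_checks` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Report:
    completed: list[str] = field(default_factory=list)  # steps that passed
    flagged: list[str] = field(default_factory=list)    # steps for human review


def run_with_checks(
    steps: list[tuple[str, Callable[[], Any], Callable[[Any], bool]]],
) -> Report:
    """Run each action, verify its result, and flag anything suspicious."""
    report = Report()
    for name, action, check in steps:
        try:
            result = action()
            ok = check(result)
        except Exception:
            ok = False  # a crash counts as an anomaly, not a success
        if ok:
            report.completed.append(name)
        else:
            report.flagged.append(name)
    return report


if __name__ == "__main__":
    steps = [
        ("summarize", lambda: "Root cause: expired cert.", lambda s: len(s) > 0),
        ("count_sentences", lambda: 7, lambda n: n <= 5),
    ]
    print(run_with_checks(steps))
```

This sketch keeps going after a failure so the human sees every anomaly at once. Stopping at the first flagged step is an equally reasonable choice when later steps depend on earlier ones.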

Multiply that by hundreds of employees and thousands of workflows and the productivity gains stop being linear. They compound.

They compound because each successful automation frees up cognitive bandwidth for the humans in the loop to create higher level solutions and optimizations.

When designing systems, we often make the mistake of assuming that all humans are rational actors. In reality, they could be tired, emotional, mutinous, hungover, or self-sabotaging.

Agents, by contrast, become predictable systems that can be root caused, improved, and run in parallel.

This is the skill that will matter most in the AI-powered economy.

Not prompt engineering in the old sense, not knowing which model has the highest benchmark score. But the discipline of orchestrating tools to automate and delegate what a model can do, to free you up to focus on the things only you can do.

The companies that master this will have a structural advantage. The individuals who master it will be the new operational elite.

Once these workflows are predictable, the human role shifts. You stop being an IC. You become a manager.

You stop being responsible for generating work product, and you start being accountable for the success and correctness of your workflows.

You oversee a network of automated actors, each tuned for a specific domain, each executing with speed and accuracy, each improving with every iteration.

GPT-5 is the first model that makes that role both viable and valuable. The next generation will make it unavoidable.

If the last era was defined by who could retrieve knowledge the fastest, the next will be defined by who can deploy it the most effectively. That is the real revolution GPT-5 has started.

It is very interesting to see how fast the models have developed. The slope of the learning curve for these models was quite high and is now leveling off. Accuracy and ease of use will be the new kids in town. Let’s hope these models know when they have it right and don’t institute a “Clippy” to help everyone out.