Beyond The Scaling Laws

New Opportunities & Challenges

Hey folks, Agora is now officially a podcast. Each article will have a supplemental podcast episode which explores the same topic, but from a different vantage point.

Check it out on Spotify:

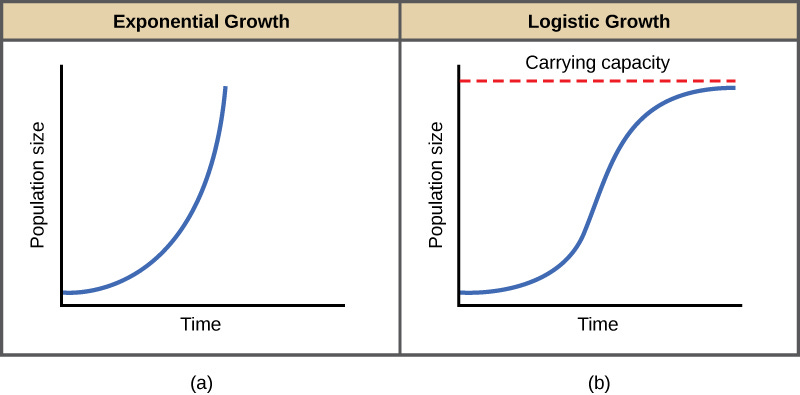

“Scaling laws are S-curves, not exponentials.”

—Pedro Domingos, Professor of Computer Science at University of Washington

The AI industry is experiencing a pivotal moment as traditional scaling methods reach their limits.

The long-held belief that increasing data, compute power, and model size would steadily and predictably enhance AI capabilities is under scrutiny.
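For context, published scaling laws describe model loss falling roughly as a power law in training compute, so even when the law holds, each additional 10x of compute buys a smaller absolute improvement. The sketch below is a toy illustration only: the functional form `loss = a * compute ** -alpha` and the constants `a = 10` and `alpha = 0.05` are assumptions chosen for readability, not values fitted to any real model.

```python
def power_law_loss(compute, a=10.0, alpha=0.05):
    """Toy scaling curve: loss = a * compute ** -alpha (hypothetical constants)."""
    return a * compute ** -alpha


def gains_per_10x(start_exp, end_exp, a=10.0, alpha=0.05):
    """Absolute loss reduction from each successive 10x increase in compute."""
    budgets = [10.0 ** e for e in range(start_exp, end_exp + 1)]
    losses = [power_law_loss(c, a, alpha) for c in budgets]
    # Pair each budget's loss with the next budget's loss and take the drop.
    return [prev - nxt for prev, nxt in zip(losses, losses[1:])]


if __name__ == "__main__":
    for e, g in zip(range(20, 26), gains_per_10x(20, 26)):
        print(f"1e{e} -> 1e{e + 1} FLOPs: loss drops by {g:.4f}")
```

Each step up in compute multiplies the loss by the same factor (`10 ** -alpha`), so the absolute gains shrink geometrically. That is the diminishing-returns pattern, before any real-world flattening into an S-curve.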

Recent models such as OpenAI's Orion and Google's upcoming Gemini model have reportedly fallen short of expectations despite substantial resource investment, highlighting the diminishing returns of brute-force scaling.

This is not altogether surprising, if you know how an LLM works.

To give a very brief refresher:

Generative Pretrained Transformers (GPTs)

The transformer architecture underlies almost all of today's new “artificial intelligence” consumer products (ChatGPT, Claude, Llama, Grok, etc.).

They are generative, meaning they do not simply retrieve stored information, but rather, generate new information based on data they have been trained on.

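They are pretrained, meaning they are first trained on huge corpora of text through self-supervised learning (learning to predict the next token), before any later fine-tuning or reinforcement learning from human feedback.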

And they use the transformer architecture, whose attention mechanism lets the model weigh the significance of different words relative to each other, which in turn lets it predict what word should come next.
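To make the “weighing” concrete, here is a minimal, pure-Python sketch of scaled dot-product attention, softmax(Q·K^T / sqrt(d))·V, with the causal mask GPT-style models use so that each token only attends to itself and earlier tokens. The toy vectors are hypothetical; real models use learned query/key/value projections, many attention heads, and optimized tensor libraries.

```python
import math


def softmax(scores):
    """Numerically stable softmax; -inf scores receive zero weight."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values, causal=True):
    """Scaled dot-product attention over lists of vectors.

    With causal=True, token i may only attend to tokens 0..i, which is what
    lets a GPT-style model be trained to predict the next token.
    """
    d = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        scores = []
        for j, k in enumerate(keys):
            if causal and j > i:
                scores.append(float("-inf"))  # mask out future tokens
            else:
                scores.append(sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d))
        weights = softmax(scores)
        # The output for token i is a weighted average of the value vectors.
        out = [sum(w * v[c] for w, v in zip(weights, values)) for c in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights
```

In a toy run where queries, keys, and values are all `[[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]`, the first token's weights come out as `[1.0, 0.0, 0.0]`: under the causal mask it can only see itself.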

As I discussed in my article What Comes After LLMs, three primary barriers stand in the way of further progress in AI:

Not enough data

Not enough compute

The limits of the transformer architecture

In response to these challenges, the AI field is shifting to new paradigms that move beyond mere scaling.

E Pluribus Unum

Techniques like test-time compute, exemplified by OpenAI's o1 model, spend additional computation at inference time to improve reasoning, rather than relying solely on what the model absorbed during training.
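OpenAI has not published exactly how o1 uses its extra inference-time compute, so the sketch below illustrates a different, publicly described technique in the same family: self-consistency, where a model samples several answers to the same question and returns the majority vote. The `noisy_solver` function is a hypothetical stand-in for a model that answers correctly 60% of the time; the point is only that spending more compute per question (more samples) can raise accuracy without any retraining.

```python
import random
from collections import Counter

CORRECT_ANSWER = 42
WRONG_ANSWERS = (40, 41, 43, 44)


def noisy_solver(rng, p_correct=0.6):
    """Hypothetical stand-in for one sampled model answer."""
    if rng.random() < p_correct:
        return CORRECT_ANSWER
    return rng.choice(WRONG_ANSWERS)


def self_consistency(rng, n_samples):
    """Sample n answers and return the most common one (majority vote)."""
    votes = Counter(noisy_solver(rng) for _ in range(n_samples))
    answer, _count = votes.most_common(1)[0]
    return answer


def accuracy(n_samples, trials=2000, seed=0):
    """Fraction of simulated questions where the majority vote is correct."""
    rng = random.Random(seed)
    hits = sum(self_consistency(rng, n_samples) == CORRECT_ANSWER for _ in range(trials))
    return hits / trials


if __name__ == "__main__":
    for n in (1, 5, 15):
        print(f"{n:>2} samples per question: accuracy {accuracy(n):.3f}")
```

The trade-off is cost: fifteen samples per question means roughly fifteen times the inference compute, which is exactly the shift from training-time to test-time spending.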

Alternative architectures such as state space models and RWKV address specific limitations of transformers, particularly in handling long sequences and long-term dependencies efficiently.
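A toy example of the state-space idea: instead of attending over every previous token, the model carries a fixed-size hidden state forward and updates it once per token (h_t = a·h_{t-1} + b·x_t, y_t = c·h_t). The diagonal, hand-picked parameters `a`, `b`, and `c` here are assumptions for illustration; real SSMs such as S4 or Mamba, and RWKV, use learned and considerably more elaborate parameterizations.

```python
def ssm_scan(xs, a, b, c):
    """Run a diagonal linear state space model as a recurrence.

    h_t = a * h_{t-1} + b * x_t   (elementwise over the state dimension)
    y_t = sum(c * h_t)
    The state has a fixed size, len(a), no matter how long xs is.
    """
    state = [0.0] * len(a)
    ys = []
    for x in xs:
        state = [ai * hi + bi * x for ai, hi, bi in zip(a, state, b)]
        ys.append(sum(ci * hi for ci, hi in zip(c, state)))
    return ys
```

With `a = [0.5]`, `b = [1.0]`, `c = [1.0]`, an impulse input `[1.0, 0.0, 0.0, 0.0]` produces `[1.0, 0.5, 0.25, 0.125]`: the past fades from the state at a rate set by `a`. Because the state never grows, cost is linear in sequence length and memory per step is constant, whereas attention compares each new token against all earlier ones.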

Additionally, there is growing interest in embodied AI and "world models" that enable an understanding of causality and physical interactions.

This shift signifies a more pluralistic future for AI research, where diverse approaches could lead to more robust and sustainable progress.

By embracing multiple methodologies, the field aims to overcome current limitations and open new pathways to achieving a more generalized intelligence.

This transition reflects a maturation of the AI landscape, moving away from the singular focus on scaling.

Cyclical

“Cold things grow hot, the hot cools, the wet dries, the parched moistens.

We both step, and do not step in the same rivers. We are, and are not”

—Heraclitus, Greek Philosopher, 530 to 470 BCE

Parallel to these technological shifts, the venture capital industry is adapting to a new economic landscape marked by uncertainty.

A majority of fund managers report a challenging fundraising climate, compounded by a series of economic and geopolitical crises. This sustained uncertainty impacts both venture capital firms and their portfolio companies.

Operating in a "permanent crisis mode," venture capitalists face challenges such as fundraising difficulties, geopolitical tensions, and evolving LP expectations. This environment necessitates a reevaluation of investment strategies and focus areas.

Additionally, there's a significant scale-up financing gap for European companies looking to expand operations, affecting exit opportunities and overall market growth. Addressing this structural weakness is essential for the industry's continued vitality.

Perhaps the most significant trend, as we close out 2024, is the shift away from traditional software (SaaS) investments.

Venture capital firms are reevaluating industries with large GDP contributions, such as energy, insurance, supply chain, and retail, and recognizing their substantial potential for value creation.

In essence, there is a major shift in investor sentiment from the world of bits to the world of atoms.

Investing in these sectors requires adapting to different business models, valuation metrics, and the crucial role of services in generating value.

The shift reflects an understanding that applying AI in these industries could unlock significant opportunities, especially if done in a way that reduces bloated operating expenses.

Most of the money is going to Seed and Series A companies, which investors feel have the potential to disrupt traditional practices and drive significant growth.

“Your margin is my opportunity.”

—Jeff Bezos

As economic uncertainty potentially eases, with the Presidential election results confirmed by a clear mandate, the next challenge for GPs is finding companies with the potential for sustained growth, rather than relying on power-law, unicorn outcomes.

Unicorns are great, but if there’s one thing ZIRP taught us, it’s that you can go from Unicorn to Zombie-corn as quickly as you can say “funding environment”.

What’s Next?

The tech industry is evolving beyond a singular focus on the AI scaling laws or the Transformer.

With the hockey stick of model scaling flattening into more of an S-curve, the best thing we can do is promote open-source model technology to keep accelerating AI research and advancement.

Bridging the scale-up financing gap is crucial, especially for companies aiming to expand operations and compete on a global scale.

Biotech and healthcare continue to present growth and innovation opportunities, while deeptech and cybersecurity gain importance with the rising need for advanced technologies and data protection.

While fundraising complexities present obstacles, firms continue to invest in promising areas with an emphasis on early-stage ventures and strong management teams.

Addressing structural weaknesses and promoting cognitive diversity remain pressing issues that the industry must tackle to ensure sustained growth and innovation in an increasingly complex global market.

With the new administration taking office in January and M&A back on the table, the markets, both public and private, are in an excellent position to rip.

The vibes are cautiously optimistic for those in a position to see where the puck is headed and follow it to where the value will be created.
