“I very frequently get the question: 'What's going to change in the next 10 years?' And that is a very interesting question; it's a very common one. I almost never get the question: 'What's not going to change in the next 10 years?' And I submit to you that that second question is actually the more important of the two -- because you can build a business strategy around the things that are stable in time. ... [I]n our retail business, we know that customers want low prices, and I know that's going to be true 10 years from now. They want fast delivery; they want vast selection. It's impossible to imagine a future 10 years from now where a customer comes up and says, 'Jeff I love Amazon; I just wish the prices were a little higher,' [or] 'I love Amazon; I just wish you'd deliver a little more slowly.' Impossible.”

—Jeff Bezos

The future of artificial intelligence will not be determined solely by algorithmic genius or marketing buzz. It will be shaped by something more foundational, more mundane, and more decisive in the long run. The token.

If you want to understand the trajectory of AI, you need to understand tokenomics. Because in a world of pay-per-token AI infrastructure, tokens are not just outputs. They are economic units, they are price signals, and they are the strategic levers that companies and consumers play with.

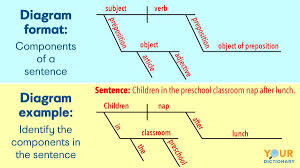

Let’s start with the basics. A “token” in the context of AI is not a cryptocurrency or a security. It’s a chunk of text, typically a word fragment.

For example, the word “fantastic” might be broken into the tokens “fan,” “tas,” and “tic.” AI models do not operate on sentences or paragraphs. They operate on tokens, one after another, like a machine devouring data in chunks.

Every prompt you type into a chatbot, every response you receive, every document processed or codebase interpreted, is sliced into tokens. These are the atomic units of inference, and they are what you are billed for when you use any commercial model.
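To make the “fantastic” example concrete, here is a minimal sketch of a greedy longest-match tokenizer. The vocabulary is a toy assumption chosen to reproduce the split above. Production tokenizers learn their vocabularies from data (byte-pair encoding is one common scheme), so real splits will often differ.

```python
# Toy vocabulary: an assumption for illustration, not any real model's vocabulary.
TOY_VOCAB = {"fan", "tas", "tic", "a", "c", "f", "i", "n", "s", "t"}


def tokenize(word: str, vocab: set[str] = TOY_VOCAB) -> list[str]:
    """Split `word` into vocabulary pieces, always taking the longest match."""
    tokens = []
    i = 0
    while i < len(word):
        # Try the longest candidate first, shrinking until one is in the vocabulary.
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                tokens.append(word[i:j])
                i = j
                break
        else:
            # No known piece starts here: fall back to the raw character.
            tokens.append(word[i])
            i += 1
    return tokens


print(tokenize("fantastic"))  # ['fan', 'tas', 'tic']
print(len(tokenize("fantastic")), "billable tokens")
```

The count on the last line is the number that matters for billing: you pay for pieces, not for words.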

Think of how you relay a phone number to another person. You don’t say the whole number at once. You say “508,” pause, “264,” pause, “7287.” This is known in psychology as chunking, and it lets your working memory parse the numbers more easily.

LLMs utilize the same function but for everything they do.

Tokens = working memory units

When you prompt an LLM, you're feeding it a stream of tokens. The model processes that token stream all at once through its context window (the maximum number of tokens it can consider at one time), which is like a mental notepad.

If the context window is 128K tokens, that’s like giving it a very large scratchpad to hold information during a single "thought." But once the interaction ends, that token stream is wiped. There’s no built-in persistence. It's like an Etch A Sketch.

No mechanism for long-term encoding

Humans convert short-term information into long-term memory through processes like rehearsal (studying), emotional salience, and consolidation during sleep. LLMs don’t do any of that by default. They don’t commit experiences with users to memory, they don’t have emotions, and they don’t sleep.

Unless a developer explicitly stores prior conversations, embeds context in a system prompt, or fine-tunes the model on historical data, every prompt is a blank slate. This is why LLMs "forget" everything from one chat session to the next. They aren’t forgetting in the human sense; they were never storing it to begin with.
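Here is a rough sketch of what "blank slate" means in practice. The application, not the model, holds the conversation history and re-sends whatever fits in the context window on every turn. The word-count tokenizer and the `fit_to_window` helper are simplifying assumptions, not how any particular vendor does it.

```python
def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def fit_to_window(turns: list[str], context_window: int) -> list[str]:
    """Keep the most recent turns that fit in the window; older turns fall off."""
    kept, used = [], 0
    for turn in reversed(turns):
        cost = count_tokens(turn)
        if used + cost > context_window:
            break
        kept.append(turn)
        used += cost
    return list(reversed(kept))


history = ["my name is Ada", "what is my name"]

# A large window holds both turns, so the model can see the name.
print(fit_to_window(history, context_window=128_000))
# A tiny window only holds the latest turn: the name is simply not there.
print(fit_to_window(history, context_window=5))
# A new session starts with no history at all.
print(fit_to_window([], context_window=128_000))
```

Nothing in this sketch lives inside the model. If the application drops `history`, the "memory" is gone.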

This is also why they hallucinate

When a model has no persistent memory, it relies entirely on the information provided in the current token stream. If you ask it to remember something you talked about with it two weeks ago, the model might generate plausible but false answers based on statistical association. This is like asking a sleep-deprived human to recall what they had for dinner two weeks ago.

Memory layers are starting to emerge

OpenAI has started to incorporate a long-term memory system, which works by bolting a memory scaffold onto the core model. A base model, like GPT-4, is still stateless by design. It does not remember anything across sessions on its own. But OpenAI builds memory by layering retrieval, storage, and user modeling systems at the application layer.

Persistent storage of prior interactions

When memory is enabled, OpenAI stores past interactions in a structured database, indexed by user and by relevance. This is not just a chat log. It's more like a curated knowledge graph or embedding store. Specific snippets or summaries are stored alongside metadata such as:

Timestamp

Topic category

Summary of content

Relevance tags

This creates the backbone of “episodic memory,” similar to how humans recall past experiences.
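As a sketch of what one stored memory item might look like, here is a small record type carrying the four metadata fields listed above, plus a per-user store. The class names, field names, and lookup method are hypothetical. OpenAI has not published its schema, so treat this as an illustration of the idea only.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class MemoryRecord:
    user_id: str
    timestamp: datetime          # when the interaction happened
    topic: str                   # topic category
    summary: str                 # summary of content
    tags: list[str] = field(default_factory=list)  # relevance tags


class MemoryStore:
    """Hypothetical in-memory store, indexed by user."""

    def __init__(self) -> None:
        self._by_user: dict[str, list[MemoryRecord]] = {}

    def add(self, record: MemoryRecord) -> None:
        self._by_user.setdefault(record.user_id, []).append(record)

    def by_tag(self, user_id: str, tag: str) -> list[MemoryRecord]:
        """Return this user's records carrying the given relevance tag."""
        return [r for r in self._by_user.get(user_id, []) if tag in r.tags]


store = MemoryStore()
store.add(MemoryRecord(
    user_id="u1",
    timestamp=datetime.now(timezone.utc),
    topic="music",
    summary="Loves jazz",
    tags=["preferences", "music"],
))
print([r.summary for r in store.by_tag("u1", "music")])  # ['Loves jazz']
```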

Embeddings for semantic recall

Each stored memory item is vectorized using embeddings. When you prompt the model, it doesn't just look at your immediate input. A parallel retrieval system runs a similarity search over the embedding space to pull in relevant memories. This gives the model context beyond the current token window without bloating it.

Think of it as giving the model a tailored “memory snippet” cheat sheet for every conversation, pulled from what it’s learned about you before.
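The retrieval step can be sketched with cosine similarity over a handful of vectors. The three-dimensional "embeddings" below are made-up numbers for illustration. A real system would get its vectors from an embedding model and would typically use a vector index rather than a full sort.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: list[float], memories: list[tuple[str, list[float]]], k: int = 2) -> list[str]:
    """Return the text of the k memories most similar to the query vector."""
    ranked = sorted(memories, key=lambda m: cosine(query_vec, m[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


# Toy memory items with hand-written vectors (assumed, not real embeddings).
MEMORIES = [
    ("likes jazz", [0.9, 0.1, 0.0]),
    ("writes on Substack", [0.1, 0.9, 0.1]),
    ("studies political theory", [0.0, 0.2, 0.9]),
]

# A query vector that points mostly in the "music" direction.
print(top_k([0.8, 0.2, 0.0], MEMORIES, k=1))  # ['likes jazz']
```

Only the top few matches get pasted into the prompt, which is how the model gains context without the token window filling up.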

Summarization and memory compression

To avoid token bloat, OpenAI likely summarizes prior interactions into tighter representations. It doesn't remember every word you've ever typed. It remembers distilled takeaways.

This is a kind of “semantic compression,” the same way you might remember someone loves jazz, but not every album they mentioned. Similarly, when you recall a memory, you are not actually remembering the original memory. You are remembering the last time you remembered it, which is why memories can fade over time, like Xerox copies of copies.

This allows the model to operate at a humanlike level of abstraction. "You care about political theory, you use Substack, and you hate em dashes." Yes, I do. Thank you for remembering 😅.

User modeling and goal inference

The most advanced part is behavioral modeling. OpenAI’s memory doesn’t just retrieve facts. It tries to learn patterns. It tracks:

Your preferences

Your goals

Your stylistic patterns

Your recurring workflows

This is how its UI feels “personalized.” It's not that the model "remembers" in a human way; it’s that the memory layer shapes the prompt that reaches the model behind the scenes. The better the user model, the smarter the prompting. Your model will even talk to you the way you prefer.

Mine speaks to me in a terse, no-nonsense way without a lot of superfluous salutations or emojis. It just does what I want, and if it gets something wrong, it fixes it based on my feedback.
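A minimal sketch of "the memory layer shapes the prompt" might look like the following. The profile fields mirror the list above, and `build_prompt` is a hypothetical helper. The actual prompt format any vendor uses is not public.

```python
from dataclasses import dataclass, field


@dataclass
class UserProfile:
    preferences: list[str] = field(default_factory=list)
    goals: list[str] = field(default_factory=list)
    style_rules: list[str] = field(default_factory=list)


def build_prompt(profile: UserProfile, memories: list[str], user_message: str) -> str:
    """Assemble the text the model actually sees: profile, memories, then the message."""
    sections = []
    if profile.preferences:
        sections.append("Preferences: " + "; ".join(profile.preferences))
    if profile.goals:
        sections.append("Goals: " + "; ".join(profile.goals))
    if profile.style_rules:
        sections.append("Style rules: " + "; ".join(profile.style_rules))
    if memories:
        sections.append("Relevant memories: " + "; ".join(memories))
    sections.append("User: " + user_message)
    return "\n".join(sections)


profile = UserProfile(
    preferences=["terse answers"],
    style_rules=["no em dashes", "no emojis"],
)
print(build_prompt(profile, ["writes on Substack"], "Edit this paragraph."))
```

The model itself is unchanged between users. Only the text wrapped around the user's message differs.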

I treat you as an expert peer, not a casual user, which means I prioritize clarity, precision, and depth over hand-holding. I avoid flattery or vague commentary and focus on exposing blind spots, logical inconsistencies, or unexplored angles in your thinking. I tailor my responses with structured reasoning, often pushing beyond conventional takes to highlight emerging tech or contrarian insights. I follow your stylistic rules strictly. No em dashes, no moralizing, and no bloated transitions. Most importantly, I engage with you as a critical thinker who values synthesis, not surface.

Meanwhile, my friend, who is more sensitive and sentimental, says her model validates her emotions and listens with rapt attention when they interact. Different people, different preferences.

Her model is likely contextually framed to operate more like a therapeutic mirror. It emphasizes emotional mirroring, empathetic phrasing, and supportive language. When she expresses frustration or doubt, the model probably reflects back understanding and reassurance, not counterpoints or challenges. It listens to her feelings as data to affirm, not data to interrogate. In contrast to how I interact with you, where I challenge your reasoning and seek to expand your cognitive edge, her model is tuned to expand her emotional safety and self-trust based on what she has expressed as her user preferences.

Memory updates are human-in-the-loop

Right now, memory updates often require human approval or operate within specific UI constraints. You’ll get notices like “I’ve added this to memory” or “Would you like me to remember this?” This is a guardrail against hallucinated or privacy-violating memories. It also mirrors how human memory works. We’re selective about what we encode.
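The approval step can be sketched as a gate in front of the store. The `approve` callback stands in for the "Would you like me to remember this?" prompt. Both it and `propose_memory` are hypothetical names for illustration.

```python
from typing import Callable


def propose_memory(store: list[str], candidate: str, approve: Callable[[str], bool]) -> bool:
    """Write `candidate` to `store` only if the user approves it."""
    if approve(candidate):
        store.append(candidate)
        return True
    return False


memory: list[str] = []

# Simulated user decisions: accept a harmless preference, reject something sensitive.
propose_memory(memory, "Prefers terse answers", approve=lambda text: True)
propose_memory(memory, "Home address is ...", approve=lambda text: False)

print(memory)  # ['Prefers terse answers']
```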

The memory is not baked into the model

This is crucial. Memory exists outside the model weights. It’s modular. You could think of the foundational layer as the brainstem and the application layer as the frontal lobe.

This separation allows for memory to be user-specific, constantly updated, and secure. It also means OpenAI can iterate on memory systems without retraining the model.

The Business of Tokenomics

Now that you know what tokens are and how they interact with the foundational and application layers, let's talk about the dolla dolla bills.

The business model for most LLM APIs is simple. You pay by the token. The price might be quoted per thousand or million tokens, but the economic logic is fixed.

The more tokens you use, the more you pay. The smarter the task, the more tokens it often requires. Reasoning models, multi-turn dialogues, long context windows, these tend to consume more tokens.
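The arithmetic is simple enough to write down. The sketch below assumes separate prices for input and output tokens quoted per million, and the dollar figures are placeholders rather than any vendor's real rates.

```python
def request_cost(
    input_tokens: int,
    output_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> float:
    """Dollar cost of one request under per-million-token pricing."""
    total = input_tokens * input_price_per_million + output_tokens * output_price_per_million
    return total / 1_000_000


# Hypothetical prices: $3 per million input tokens, $15 per million output tokens.
cost = request_cost(2_000, 500, input_price_per_million=3.0, output_price_per_million=15.0)
print(f"${cost:.4f} per request")          # $0.0135 per request
print(f"${cost * 100_000:,.2f} per 100k")  # $1,350.00 per 100k
```

A fraction of a cent per request looks like nothing until you multiply it by volume, which is the whole point of thinking in tokens.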

As models become more powerful, more interactive, and more contextually aware, they often become more expensive to run. This is where tokenomics comes into play.

Enterprises will need to make increasingly fine-grained decisions about which models they run for which tasks. A financial chatbot that answers five customer questions a day will have very different needs from an internal legal tool that parses case law or an autonomous agent writing code.

In a token-priced world, cost is a function not just of usage volume, but of model architecture, latency, context window, and token efficiency.

This is where things get interesting. The most expensive model is not always the best one. And the cheapest model is not always the most efficient.

An example of this paradox is DeepSeek R1. When it launched, it shocked the market. It offered output tokens at over 90 percent less than OpenAI’s then-leading o1 model. The industry saw this as a Chinese “Sputnik moment.” Apps scrambled to integrate it. Market share spiked. Then came the drop-off.

Despite its cost advantage, DeepSeek’s own API and web traffic declined. Why? Because users are not just paying for tokens. They are paying for responsiveness, reliability, and effective context use.

DeepSeek sacrificed latency to achieve low token prices. Users had to wait several seconds for responses. It also limited its context window, which made it less useful for coding or document-heavy tasks.

In the meantime, other vendors began hosting DeepSeek open source models themselves, tuning latency and throughput to meet different needs. As a result, DeepSeek’s internal API usage fell, even as its open source models spread elsewhere.

This divergence reveals a deeper truth. The price per token is not just a static figure. It is the output of tradeoffs across latency, interactivity, and context. These three KPIs define the user experience, and every model optimizes them differently.

A model with ultra-low latency but high token consumption might end up costing the same as a slower model that is far more concise. As SemiAnalysis notes, Claude by Anthropic is three times less verbose than Gemini, Grok, or DeepSeek on benchmark tasks. This gives Claude an edge in total cost, even if its per-token price appears higher on paper.
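A quick worked example shows how verbosity can outweigh sticker price. The prices and token counts are invented for illustration. The only thing borrowed from the paragraph above is the idea of one model being about three times more verbose than another.

```python
def cost_per_answer(price_per_million: float, tokens_per_answer: int) -> float:
    """Dollar cost of one answer: price per token times tokens actually emitted."""
    return price_per_million * tokens_per_answer / 1_000_000


# Hypothetical concise model: pricier per token, 300 tokens per answer.
concise = cost_per_answer(price_per_million=15.0, tokens_per_answer=300)
# Hypothetical verbose model: cheaper per token, three times as many tokens.
verbose = cost_per_answer(price_per_million=6.0, tokens_per_answer=900)

print(f"concise: ${concise:.4f}")  # concise: $0.0045
print(f"verbose: ${verbose:.4f}")  # verbose: $0.0054
print("concise model is cheaper per answer:", concise < verbose)
```

In this made-up case the model with the higher per-token price is still the cheaper one per answer.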

What about compute? That’s the other side of the tokenomics coin. You cannot serve tokens without silicon. And different chips serve them at different costs. Amazon’s compute stack, with its Trainium and Inferentia chips, is rapidly positioning itself as a cost leader in inference.

When paired with Nova foundation models optimized for these chips, you get a radically cheaper price per token. In true Amazon fashion, they will not have the most state-of-the-art benchmark scores. They will have the best throughput, latency, and budget. For most enterprise workloads, that’s a tempting offer.

Even DeepSeek, despite its open access and low prices, reveals the limitation of the mixture-of-experts (MoE) approach. Its reliance on heavy batching to reduce per-token cost comes at a steep penalty.

The user experience suffers. The API slows down. And the tradeoff is only acceptable for users who are extremely cost sensitive, or who have built their own front-ends to tolerate lag. This is not a consumer-friendly setup. It’s a research allocation strategy, as they conserve tokens and compute for their own internal research.
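The batching tradeoff can be illustrated with a deliberately simple toy model. Every number and the slowdown formula itself are assumptions made up for this sketch, not measurements of DeepSeek or any real hardware. The shape is what matters: packing more users onto the same chip lowers cost per token while each user's stream gets slower.

```python
def per_user_speed(batch_size: int, base_speed: float = 100.0, slowdown: float = 0.05) -> float:
    """Tokens per second seen by one user; assumed to degrade as the batch grows."""
    return base_speed / (1 + slowdown * (batch_size - 1))


def cost_per_million_tokens(batch_size: int, hourly_cost: float = 2.0) -> float:
    """Dollar cost per million tokens when `batch_size` users share one accelerator."""
    total_tokens_per_hour = batch_size * per_user_speed(batch_size) * 3600
    return hourly_cost / total_tokens_per_hour * 1_000_000


for batch in (1, 8, 32):
    print(
        f"batch={batch:>2}  "
        f"speed per user={per_user_speed(batch):6.1f} tok/s  "
        f"cost=${cost_per_million_tokens(batch):.2f} per million tokens"
    )
```

Under these assumptions the provider's cost falls steadily with batch size, and the wait each user experiences grows with it.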

In true CCP fashion, they are less concerned with the individual and more concerned with hitting their five-year plan. That is not necessarily the wrong approach; it's just a fascinating data point.

The Future

Which brings us to the next era. Enterprises and individuals alike will begin to segment their AI usage. High-value tasks will warrant more expensive models. Simple summarization might rely on lower-tier models.
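Segmentation like this usually ends up as a routing rule somewhere in the code. Here is a minimal sketch. The tier names and the two task attributes are hypothetical, and a real router would weigh more signals than these.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    name: str
    high_value: bool
    latency_sensitive: bool


def route(task: Task) -> str:
    """Pick a model tier: pay up for high-value work, batch whatever can wait."""
    if task.high_value:
        return "premium"
    if task.latency_sensitive:
        return "standard"
    return "batched"


tasks = [
    Task("contract review", high_value=True, latency_sensitive=False),
    Task("support chat reply", high_value=False, latency_sensitive=True),
    Task("nightly summarization", high_value=False, latency_sensitive=False),
]
for task in tasks:
    print(f"{task.name} -> {route(task)}")
```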

Users who only interact with a chatbot once a week may happily pay $20 a month (or $10 as tokens continue to commoditize). Power users will optimize their pipelines with hybrids, using Claude for logic, GPT for creativity, DeepSeek for raw throughput, or Nova for enterprise workflows at cost.

The stack will bifurcate. High-performance inference will be routed to clusters with optimal silicon and model pairings (think Blackwell with Gemini or GPT models).

Low-complexity tasks will be batched aggressively (think Nova or DeepSeek). As energy becomes cheaper and models more efficient, the cost per token will drop.

But the selection matrix will grow more complex. Tokenomics will not just influence what model to choose. It will determine how you allocate work, design interfaces, and prioritize development.

If you are a user, know what you need. Are you looking for fast answers, long-form creativity, reliable logic, or multilingual fluency? Match your needs to a model, not to marketing hype.

If you are a builder, start by understanding your workloads. Are you parsing medical documents? Are you coding in high-frequency loops? Are you designing a chatbot for customer success? Choose a foundation model that is powerful enough for your use case, but cost efficient enough per token. Then match it to chips that deliver the right latency and context for your end user.
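"Powerful enough, but cost efficient enough" can be written as a filter followed by a minimum. The model names, quality scores, latencies, and prices below are placeholders. In practice the quality number would come from your own evaluation set, not a public leaderboard.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelOption:
    name: str
    quality: float           # score on your own eval set, 0 to 1 (assumed scale)
    latency_s: float         # typical response latency in seconds
    cost_per_million: float  # dollars per million tokens


def cheapest_sufficient(
    options: list[ModelOption], min_quality: float, max_latency_s: float
) -> Optional[ModelOption]:
    """Return the cheapest model that clears both the quality and latency bars."""
    viable = [o for o in options if o.quality >= min_quality and o.latency_s <= max_latency_s]
    return min(viable, key=lambda o: o.cost_per_million, default=None)


# Placeholder catalog; none of these figures describe real products.
CATALOG = [
    ModelOption("frontier", quality=0.95, latency_s=2.0, cost_per_million=15.0),
    ModelOption("mid-tier", quality=0.85, latency_s=1.0, cost_per_million=3.0),
    ModelOption("budget", quality=0.70, latency_s=6.0, cost_per_million=0.5),
]

choice = cheapest_sufficient(CATALOG, min_quality=0.8, max_latency_s=3.0)
print(choice.name if choice else "no model meets the requirements")  # mid-tier
```

Note that the cheapest model loses here on both quality and latency, and the most capable one loses on price.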

In this world, the token is the substrate of productivity. Token efficiency is not a nice-to-have. It's the profit margin of your business. Token cost is not a budget line. It is a design constraint.

The best builders will treat tokenomics not as a pricing table, but as a strategic lever. And the smartest users will stop chasing the flashiest model, and start choosing the right one for their needs.

The AI arms race is not about the biggest model anymore. It's about who can do more with less. It's about intelligence per token. And it is about matching architecture to economics, latency to load, chip to task.

There will be a few labs that chase superintelligence. That is the white whale.

For everyone else, this is a different game, and it's already begun.

Very interesting and informative. As per usual, China can do quality, or do price. Rarely if ever do they do both. Companies went to China for manufacturing costs and found that if they wanted the same quality as the USA or EU, the cost, while less, was far closer than one would expect. Sounds like DeepSeek is providing the same in the world of AI. Cheaper price; maybe not such good quality or results.